Episode 78 - Martech for 2026


Welcome to episode 78 of the Retention Blueprint!

On Tuesday, Frans Riemersma and Scott Brinker released the Martech for 2026 report.

It's 127 pages. 38,034 words. 585 diagrams. It's here if you want it.

I’ve distilled the report for those who work in retention and added my own thoughts in 863 words.

📰 Martech for 2026

First up, the whole report is about AI.

AI agents specifically.

And despite what you are reading on LinkedIn, “almost everyone is still in the early innings of figuring this out” (p.5).

“Only 23.3% of respondents had agents deployed in full production use cases.” (p.5)

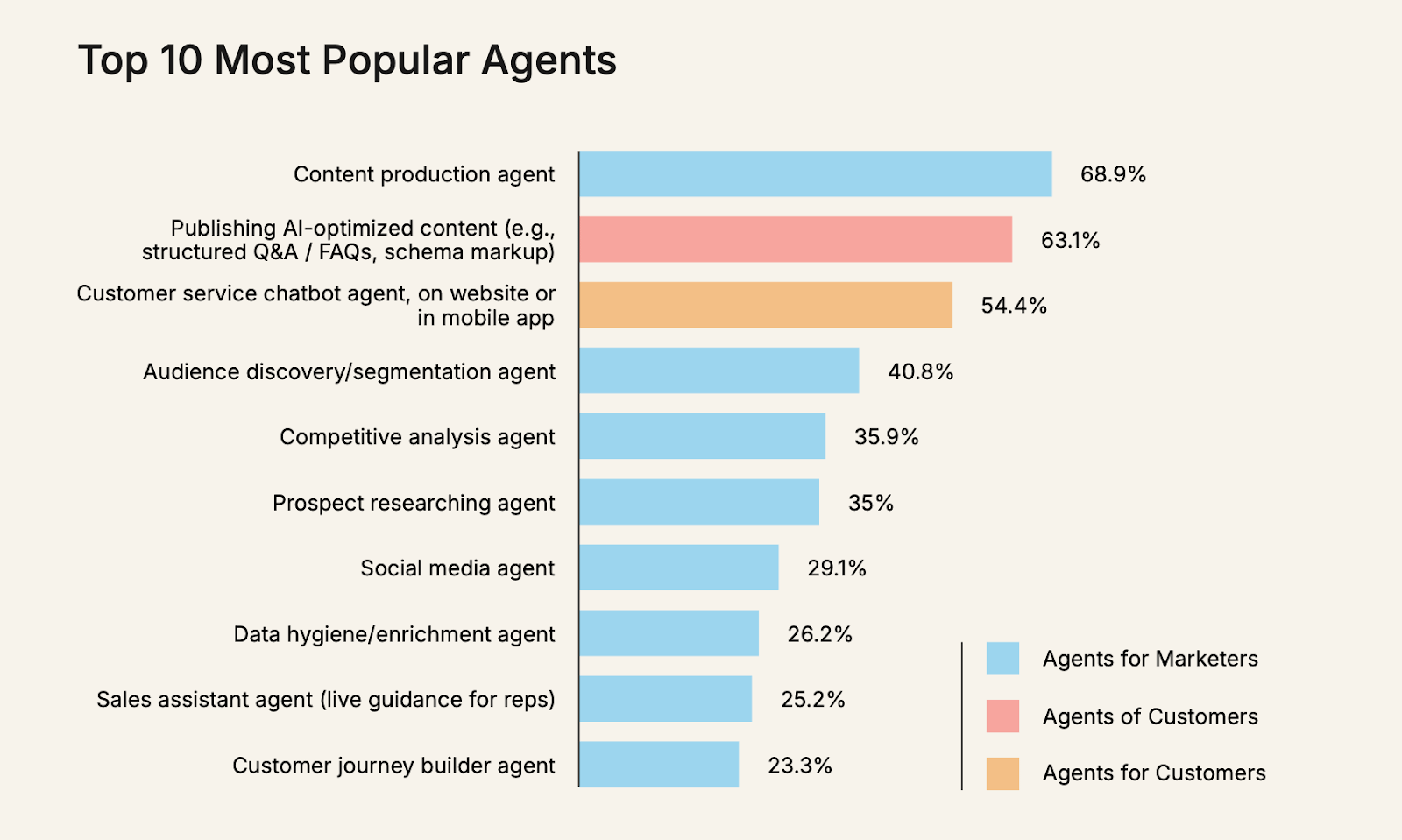

(p.21)

And “62.1% of our AI & Data in Marketing survey respondents said they’re using these built-in agents embedded in their existing martech platforms.” (p.34)

Scott and Frans are talking about AI agents that perform single, one-off tasks, decisions, or recommendations, e.g., content production or chatbot agents.

In other words, autonomous, goal-based systems capable of completing complex, interrelated tasks are still a long way off.

According to Forrester, it is about 5-7 years away. I wrote about this here.

So Scott and Frans' stat that 23.3% of respondents have agents in full production must be taken with a pinch of salt. What it really means is that these respondents are using some kind of agentic functionality, and most of it is co-pilots.

And as the report makes clear, “We are undeniably in a period where AI is advancing faster than organisations are able to keep pace. If you feel behind that curve, well, you almost certainly are. Because nearly everyone is.” (p.62)

Data availability remains the biggest blocker.

For those implementing these goal-based agents, the biggest barrier to production is data: “Getting the right data, to the right AI agent, at the right time, is where the magic — and most of the real work — happens.” (p.7)

Many brands still haven't figured out how to get the right data to the right part of the organisation, and this doesn't change with AI agents.

There are moments of truth that make or break customer retention.

Successful deployment of AI agents depends on the ability to bring the right context to each customer touchpoint (p.38).

Scott and Frans argue that AI tech is a commodity, not a differentiator; the ability to provide the right context is what differentiates and will help brands win.

I agree with this to a point, but once you understand the moment of truth, how you respond to it matters more than simply having the context.

And I’d also question whether context truly is differentiation.

Once everyone gets their data in order, it won't be.

What differentiates is how you respond to the interaction based on the context, essentially how you make the customer feel based on their jobs-to-be-done.

This requires strategy and guardrails, set by a human.

Risk 1 - Customer service AI agents

Customer-service agents (read: chatbots) now resolve 60%+ of cases (p.24), but the report warns of three retention risks:

Poor data quality or outdated rules → wrong answers

No way to reach a human → frustration

Over-automation for company efficiency → deteriorated customer experience

Service is a key moment of truth, and what it actually comes down to is a) how easy it is to resolve my query and b) how I feel in the moment.

Chatbots sitting on top of LLMs are far better than the first wave of customer service bots, but the risk remains.

Risk 2 - Consumers using AI assistants

Consumers increasingly use AI assistants such as ChatGPT, Perplexity, and Gemini for discovery, evaluation, and even purchase (p.28–29); the report puts the figure at 50%.

This means brands need:

AEO (Answer Engine Optimisation) so your brand shows up correctly in AI responses (p.30–31).

Machine-readable context for agents (feeds, schemas, llms.txt, agents.json).

“AirOps, Bluefish, Daydream, Evertune, Profound, and Scrunch are examples of some of the innovative new AEO products gaining adoption with marketers.” (p.30)

Risk 3 - Missed assumptions about AI

AI is non-deterministic (p.11): the same input can produce different outputs. Deterministic workflows, by contrast, are predictable.

For retention-critical interactions (cancellations, complaints, failures), the report recommends hybrid approaches: AI for interpretation, deterministic rules for escalation and guardrails.
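The report doesn't prescribe an implementation, but the hybrid split can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `interpret()` is a hypothetical stand-in for the AI step (in practice an LLM call; here a keyword lookup so the sketch runs), and the intent names and the 0.8 confidence threshold are invented for the example. The point is the division of labour: the AI only interprets the message, while plain rules decide when a human takes over.

```python
from dataclasses import dataclass

# Retention-critical intents named in the report: cancellations, complaints, failures.
RETENTION_CRITICAL = {"cancellation", "complaint", "failure"}
# Hypothetical threshold; below this, the rules hand off to a human.
MIN_CONFIDENCE = 0.8


@dataclass
class Interpretation:
    intent: str
    confidence: float


def interpret(message: str) -> Interpretation:
    """Hypothetical stand-in for the non-deterministic AI step.

    A real system would call a model here; this keyword lookup only
    keeps the sketch self-contained and runnable.
    """
    text = message.lower()
    if "cancel" in text:
        return Interpretation("cancellation", 0.9)
    if "broken" in text or "not working" in text:
        return Interpretation("failure", 0.85)
    if "complain" in text or "unhappy" in text:
        return Interpretation("complaint", 0.7)
    if "delivery" in text or "order" in text:
        return Interpretation("order_status", 0.9)
    return Interpretation("unknown", 0.3)


def route(message: str) -> str:
    """Deterministic guardrails: the same interpretation always gets the same route."""
    result = interpret(message)
    if result.intent in RETENTION_CRITICAL:
        return "escalate_to_human"  # never let the bot handle these alone
    if result.confidence < MIN_CONFIDENCE:
        return "escalate_to_human"  # unsure what the customer wants
    return "ai_reply"


if __name__ == "__main__":
    for msg in ["I want to cancel my subscription", "When will my delivery arrive?", "Hello there"]:
        print(msg, "->", route(msg))
```

Here a cancellation request goes straight to a human, a routine delivery question gets an AI reply, and anything the model can't confidently read also falls back to a person, which addresses the "no way to reach a human" risk above.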

Risk 4 - AI sales reps

The report highlights a vicious cycle driven by AI sales reps (p.25–26):

Volume increases

Trust erodes

Customers tune out

Brands push harder

This applies to lifecycle comms too.

Over-automated sales or retention messaging → trained disengagement → higher brand avoidance/churn.

The point here is that you must earn the right to talk, not just talk more.

Risk 5 - AI is the new threat to email

“The leading email clients from Apple, Google, and Yahoo are already experimenting with AI to reshape what a recipient sees in their inbox. Not just filtering and routing messages. But overwriting a marketer’s crafted preview text — or even subject line — with their own AI-generated previews and summaries of emails.” (p.32)

AI-driven synthetic customers might be the answer to some of these risks

Synthetic/digital twin populations (p.20) let you:

Test retention interventions

Pre-run journey flows

Assess emotional responses

Explore reason-for-churn hypotheticals

Examples of products in this category include Brox, Delve.ai, Evidenza, OpinioAI, Panoplai, and Synthetic Users (p.24).

Until next week,

Tom

P.S. What did you think of this episode?
